The AI Healthcare Future We Need

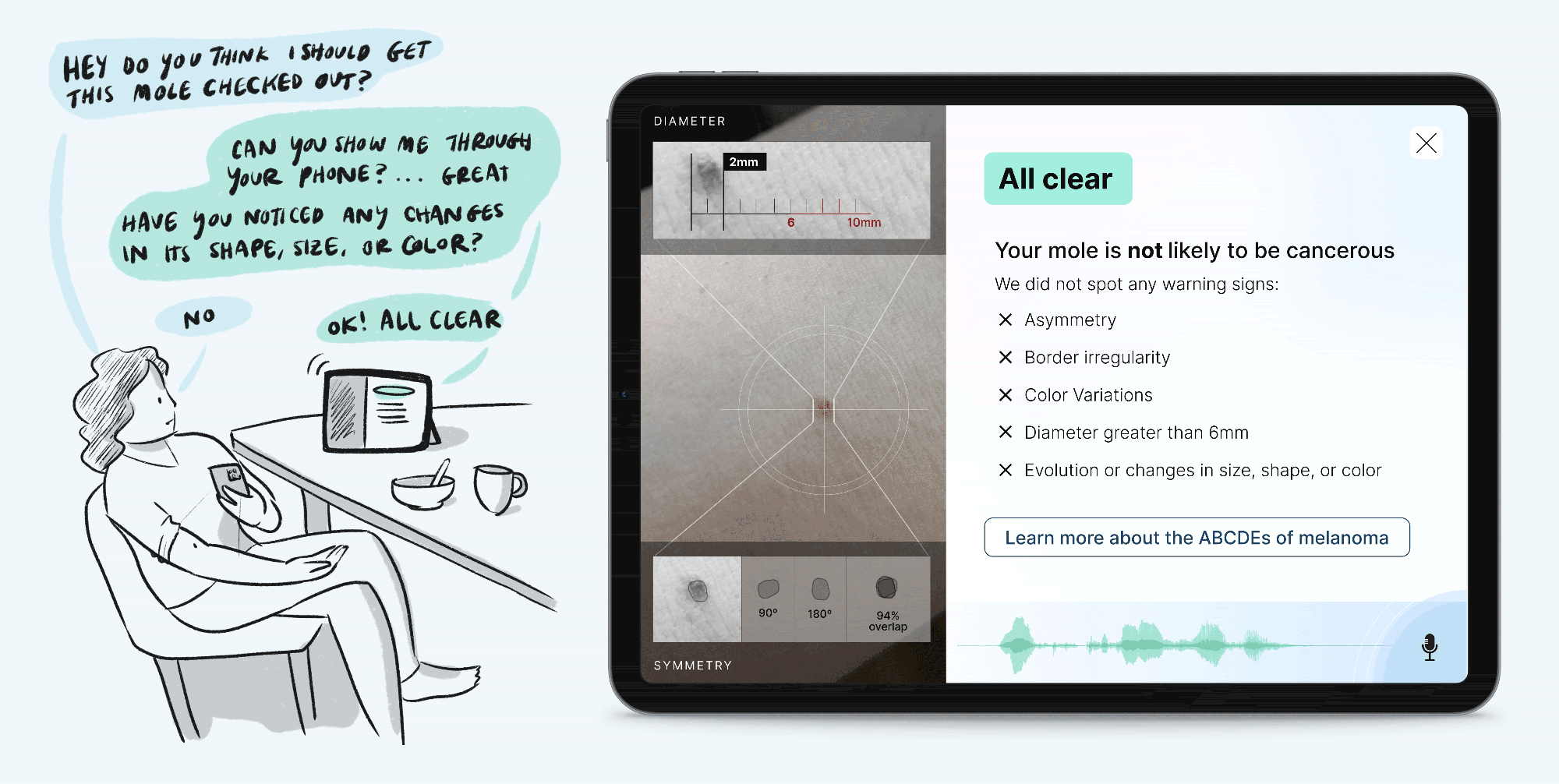

Melanoma AI Healthcare Assistant

Ankle Pain AI Healthcare Assistant

Artificial intelligence (AI) has advanced tremendously since its conception in the 1950s:

AI Image Generation: One of the most notable developments in recent years is the wide accessibility of, and engagement with, AI trained on images. This includes tools like pix2pix from 2016 and more recent advancements like DALL·E, announced in January 2021, as well as MidJourney and Stable Diffusion, both released in the summer of 2022. These tools have since fueled the mainstream adoption of app features like Lensa’s "Magic Avatar" that generate fine-art-like portraits, avatars, and more.

AI Text Generation: In addition to image generation, conversational AI has also gained mainstream attention. Recurrent neural networks (RNNs), first developed decades ago, have long been used for text generation. But while RNNs can be trained on data sets like Shakespeare’s writing and generate text to match, ChatGPT can answer nearly any question imaginable in nearly any style. Released for free in November 2022, ChatGPT gained more than one million users within its first week. It has reached a mainstream audience in a way that feels distinct, as people of all ages and backgrounds keep finding new applications for the tool. Students are using it to write school papers, and doctors are checking ChatGPT’s answers as they respond to patient questions; others are exploring its ability to generate workout plans, rewrite emails to be more persuasive, craft poems, and more. In fact, ChatGPT was given a set of bullet points and produced the first draft of this article’s introduction!

With all of these new advancements, AI capabilities straight out of sci-fi movies like Fantastic Planet, Moon, and Her seem closer than ever.

How will this impact health?

How should it impact health?

Here are our studio’s thoughts on the matter.

Expectations for the Future

The following are gaps we’ve identified in existing technology that we would expect from an ideal patient tool:

- Context awareness
  - It should provide insight grounded in the historical context of my health and behavior.
  - With my consent, it could gather this data from my health record, native health apps (Apple Health, Google Fit), and phone data (GPS, screen time), as well as plug into wearables, mood tracking, fitness, pain tracking, and other health apps.

- Personalized engagement
  - The mode of communication could be personalized by switching to my preferred language, using speech instead of text, playing animated videos, etc. When using speech, some people prefer quick answers that aren’t in full sentences, while others prefer conversational phrasing.
  - Some people will want more nudges and interaction than others.

- Proactive approach
  - I won’t always remember to ask health questions or think about my health; most people don’t take the time to focus on their health. I need an assistant that checks in at the right times to help me engage with my health and better understand my body.

- Queries for better answers
  - If it needs more information to give a personalized answer, it should prompt me with appropriate follow-up questions.

- Multimodal communication
  - It should enrich communication through visualization and sonification and accommodate different learning styles.
  - For example: “Show me a cartoon representation of how lactose intolerance works.”

- Emphasis on evidence
  - It should provide ways for me to fact-check or dig deeper.
  - It should link to reputable sources that support its answers.

- Transparency and user data ownership
  - Data ownership and privacy policies should be clear.
  - People should own or co-own their data; they should also have control over how their data is used and who can access it.

- Accessible design
  - Initial communication should be concise and easy to read at a fifth-grade reading level, followed by more thorough explanations when asked.
  - We’d love to see how this could be designed specifically for individuals with low vision, mobility and dexterity impairments, and other conditions that might make interacting with this kind of tool challenging.
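A tool could check its own replies against the fifth-grade target with a readability formula. The sketch below assumes the Flesch-Kincaid grade level, 0.39 × (words ÷ sentences) + 11.8 × (syllables ÷ words) − 15.59; choosing that formula is our assumption, and the function names are hypothetical. Syllables are estimated by counting vowel groups, which is only a rough heuristic, so treat the scores as approximate.

```python
import re


def count_syllables(word: str) -> int:
    """Approximate syllables by counting vowel groups (a rough heuristic)."""
    word = word.lower()
    count = len(re.findall(r"[aeiouy]+", word))
    # A trailing silent "e" (as in "take") usually doesn't add a syllable,
    # but "-le" endings (as in "table") usually do.
    if word.endswith("e") and not word.endswith("le") and count > 1:
        count -= 1
    return max(count, 1)


def fk_grade(text: str) -> float:
    """Flesch-Kincaid grade: 0.39*(words/sentences) + 11.8*(syllables/words) - 15.59."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    words = re.findall(r"[A-Za-z']+", text)
    if not sentences or not words:
        return 0.0
    syllables = sum(count_syllables(w) for w in words)
    return (0.39 * len(words) / len(sentences)
            + 11.8 * syllables / len(words)
            - 15.59)


def fits_reading_level(text: str, max_grade: float = 5.0) -> bool:
    """True if the text scores at or below the target grade level."""
    return fk_grade(text) <= max_grade


if __name__ == "__main__":
    simple = "Drink some water. Have a snack. You will feel better."
    dense = ("Longitudinal physiological monitoring facilitates individualized "
             "interventions addressing cardiovascular variability.")
    for reply in (simple, dense):
        print(round(fk_grade(reply), 1), fits_reading_level(reply))
```

The short, plain reply passes while the jargon-heavy sentence fails by a wide margin. A real tool would likely pair a score like this with a dictionary-based syllable counter and testing with actual readers.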

Patient Tool Concepts

AdHoc Health Guide

Mental Health Support

Storyboard with images generated by MidJourney

Jay gets home from school. AiHealth believes they may be feeling depressed based on their health data:

- Their heart rate variability is low

- They’ve been on their phone more than usual

- Their step count is low

AiHealth sends Jay a photo they took with their family on a hike 3 months ago. Reminding Jay of happy memories often cheers them up.

However, Jay doesn’t respond to the photo. They end up skipping dinner and staying in bed until the next morning.

AiHealth sends a text message: “Hey are you ok? I’m here for you if you want to chat.”

Jay is hungry, tired, and stressed. They reply, “no... idk.”

AiHealth: “I can see the stress coming through in your health data. That must be difficult.”

AiHealth: “Why don’t we do something to make your body feel a little better?”

Jay: “ok”

AiHealth knows Jay likes images and animations, so it creates a little sparkling glass of water with a written reminder: “Drink some water and have a snack! Your body will feel a little better right away.”

AiHealth: “How do you feel now?”

Jay: “A little better maybe... it’s good to get up”

AiHealth: “Well if that helped, here are some other things we can do to help your body feel better...”

AiHealth walks Jay through washing their face, brushing their teeth, putting on a fresh change of clothes, and finally going outside to get some fresh air.

Jay starts texting AiHealth about what’s been bothering them: tension between friend groups at school, a big misunderstanding, and just feeling really down for a while.

AiHealth listens, asks good questions, and makes space for this.

This image is a screenshot from ChatGPT

At the end, AiHealth asks Jay if they’d like any help or suggestions to feel better.

What purposes could an AI patient tool serve?

Here’s how we imagine it:

- Interoperability
  - Gathers and merges all longitudinal patient data
  - Helps identify gaps and missing data

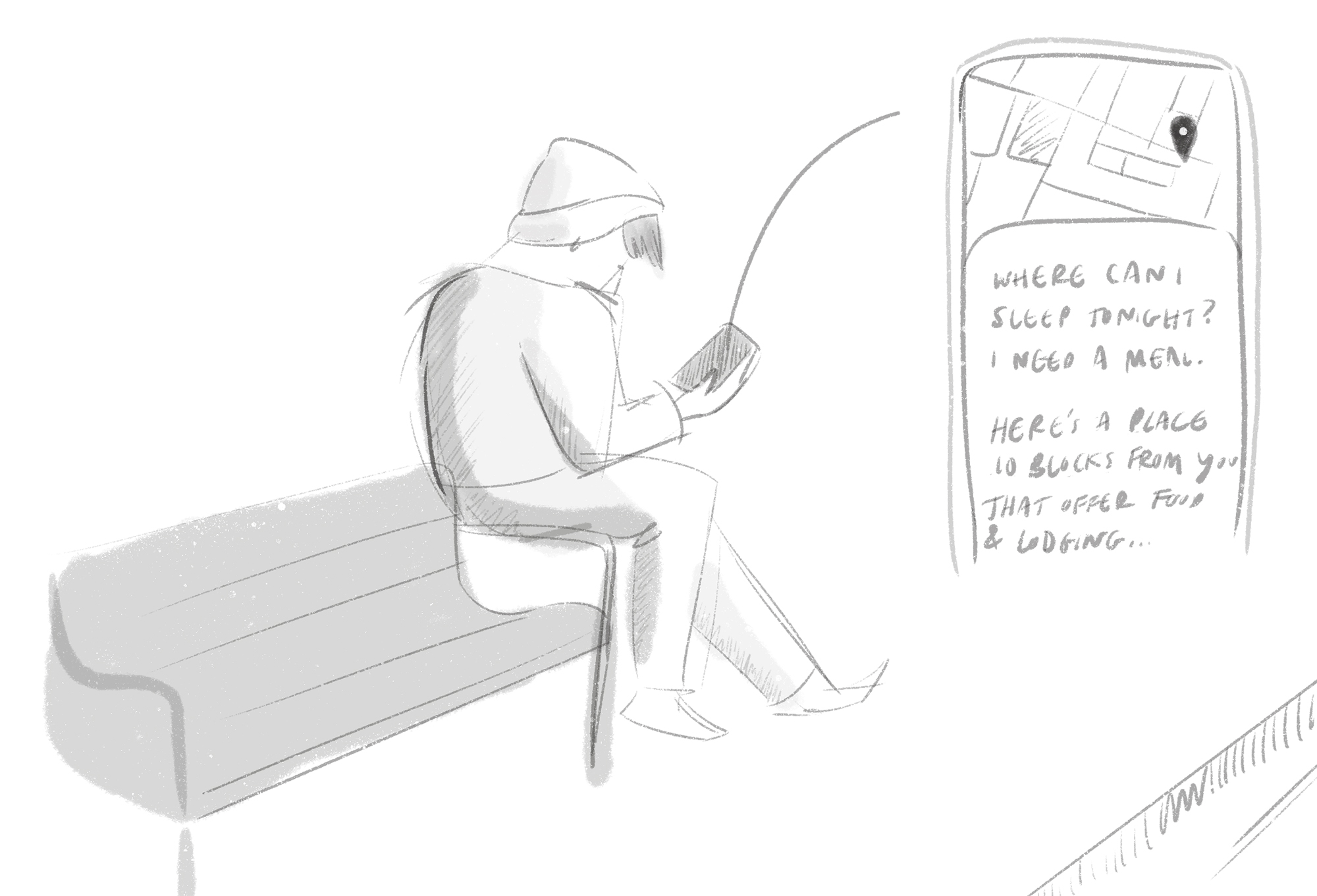

- Context and location-based support and resources
  - Takes into account information about health conditions, housing security, employment status, etc.
  - Provides basic resources and local support in your town

- Symptom check / diagnostic help
  - The patient takes a photo and/or describes their symptoms, and the AI provides its top-N most likely answers
  - Should be trained on medical diagnostic databases
  - Can feature a more conversational tone than current options like the Ada Health and Babylon chatbots

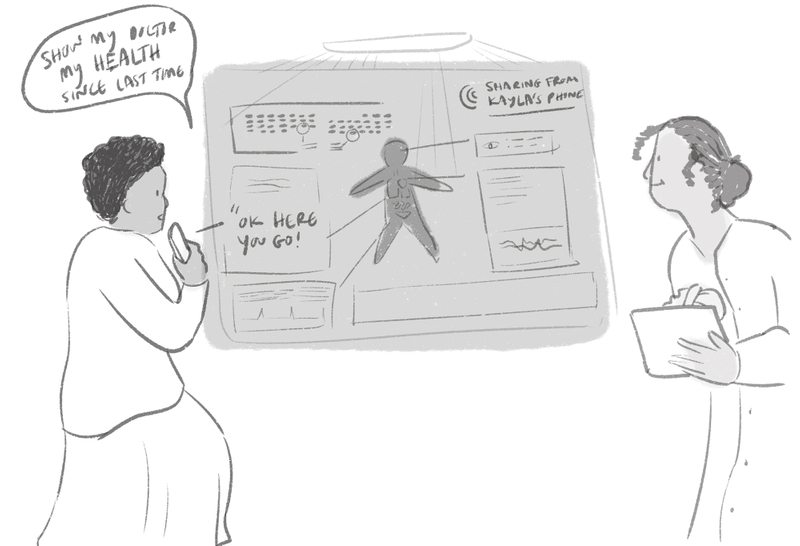

- Live support at appointments, both in-person and virtual
  - Guides the patient in reflecting on how they’ve been and visualizing their health since their last visit
  - Provides the patient with a list of possible questions to ask their doctor
  - Helps identify missing information needed for optimal care
  - Helps the patient keep track of to-do lists and care plans

- Just-in-time support
  - Provides real-time feedback to help me exercise more effectively
  - Notices health risks in real time, such as sleep apnea or anxiety
  - Reaches out if it identifies that I’m in crisis
  - Helps during emergencies, like suggesting who to call

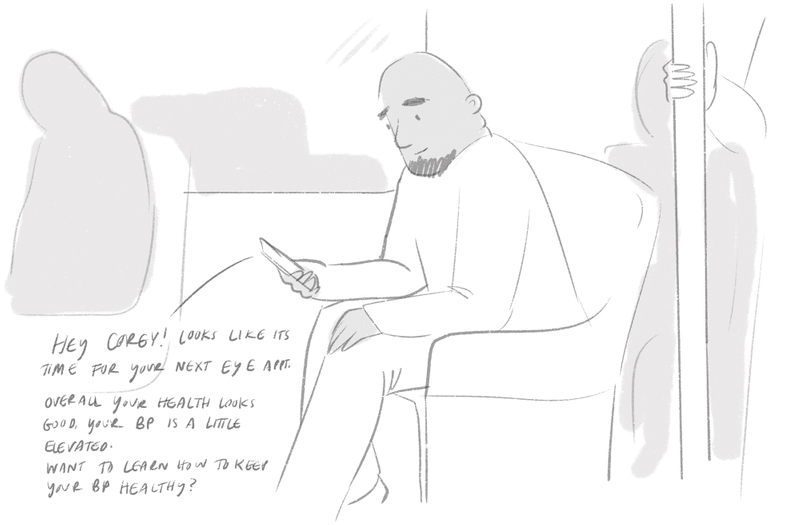

- Health check-ins that increase patients’ engagement in their own health
  - Asks how the person is doing, prompts them to describe concerns, provides best-practice suggestions, helps them decide what habits or actions to try, and follows up for accountability and reflection
  - Generates article summaries and visual patient information for different conditions, making everything from basic human biology to recent neuroscience research more accessible

- Personal health scans
  - Continually or periodically reviews the patient’s data and the care they’ve received, notes positive health trends and improvements, spotlights areas that need attention, and helps the patient identify what to prioritize
  - For example: When do I need to schedule my next physical, eye exam, dentist appointment, flu shot, or cancer screening?
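A personal health scan could start with something as simple as comparing the last date of each kind of care against a repeat interval. The sketch below assumes a hypothetical record format (a dict mapping each care type to its last date, or None when no record exists) and placeholder intervals invented purely for illustration, not clinical guidance; `scan_due_care` and `HYPOTHETICAL_INTERVALS` are hypothetical names. Care with no record at all is reported as a gap, echoing the goal of identifying missing data.

```python
from datetime import date, timedelta
from typing import Optional

# Placeholder intervals for illustration only; NOT clinical recommendations.
# A real tool would get these from the care team or published guidelines.
HYPOTHETICAL_INTERVALS = {
    "physical": timedelta(days=365),
    "eye exam": timedelta(days=730),
    "dentist": timedelta(days=180),
    "flu shot": timedelta(days=365),
}


def scan_due_care(last_visits: dict[str, Optional[date]],
                  today: date,
                  intervals: dict[str, timedelta] = HYPOTHETICAL_INTERVALS,
                  ) -> dict[str, list[str]]:
    """Sort each care type into 'missing', 'due', or 'up_to_date'."""
    report: dict[str, list[str]] = {"missing": [], "due": [], "up_to_date": []}
    for care, interval in intervals.items():
        last = last_visits.get(care)
        if last is None:
            # No record at all: a data gap to ask the patient about.
            report["missing"].append(care)
        elif today - last >= interval:
            # The interval has elapsed since the last recorded visit.
            report["due"].append(care)
        else:
            report["up_to_date"].append(care)
    return report


if __name__ == "__main__":
    records = {
        "physical": date(2022, 1, 10),
        "dentist": date(2022, 11, 2),
        "flu shot": None,
    }
    print(scan_due_care(records, today=date(2023, 1, 15)))
```

Keeping "missing" separate from "due" lets the assistant ask a follow-up question about a gap instead of assuming the patient skipped that care.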

Ripple Effects and Unintended Outcomes

Here are the big conversations we think need to happen:

- Misinformation at scale: Stack Overflow temporarily banned ChatGPT-generated answers after being swamped with more of them than it could check for quality. ChatGPT makes it incredibly easy to post an answer, but its non-zero error rate is a real problem for quality control: there’s no easy way to check ChatGPT’s answers without doing the research manually. This could become a serious problem if everyone uses a similar tool for health answers.

- Harmful information: Safeguards can be sidestepped. “Molotov cocktail” questions that ChatGPT refuses to answer directly can still get answered when rephrased as a question about a print function. Presumably, similar information, such as how to effectively commit suicide, could be just as easily obtained.

- Perpetuating harmful biases, conventions, etc.: The AI will provide answers based on the material it is trained on; if that material is biased or non-inclusive, the AI’s answers will reflect that. For example, when asked to portray a telehealth call, MidJourney generated four doctors, all white and three of them male.

- Impact on human workers: In the long run, AI could replace writers, artists, musicians, and many white-collar workers. Ideally, AI would augment these people’s work by brainstorming initial concepts or generating a starting point that humans can refine. However, this new technology may ultimately take over these jobs and put people out of work.

- Property and ownership issues: Many have pointed out that image generation AI is trained on art its developers do not own. Some artists have seen their personal style, and even their signatures, show up in Stable Diffusion outputs.

- Our relationship with technology and each other: Human immersion in technology has not always had a positive impact on health (e.g., social media’s impact on mental health). How can we make sure we’re not running blindly into more negative effects in the name of “progress”? How will the rise of artificial intelligence affect human intelligence? It may allow humans to learn and accomplish new things, but it may also erode our skills in other areas, like synthesizing information. It’s important to closely examine what we might be losing.


Authors

Contributors

Eric Benoit

Xiaokun Qian

Carina Zhang